About Language Identification

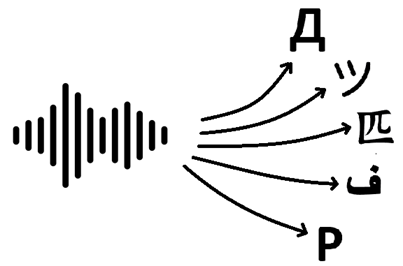

Phonexia's Language Identification technology processes audio recordings to estimate the probabilities of different languages present in the recording. The technology is text-independent, meaning that language recognition is based on phonetic and suprasegmental features (such as stress, intonation, rhythm) rather than on the spoken text itself. Language Identification can recognize both languages and dialects/varieties for some selected languages.

Typical use cases

- Preprocessing audio for other speech recognition technologies (such as Speaker Identification or Speech to Text).

- Fast filtering of audio based on language.

How does it work?

The Language Identification algorithm comprises the following steps:

- Filtering out segments of silence and technical signals using Voice Activity Detection (VAD).

- Segmenting the voice into chunks of 10 seconds each.

- Generating a languageprint for each 10-second chunk.

- Creating an averaged languageprint from these chunks.

- Normalizing and transforming the embeddings derived from the languageprint to estimate the likelihood of each language.

- Utilizing the user-defined subset of languages or groups to compute the posterior probabilities for each language or group (see the Specifying languages and groups section).

When creating languageprints from the 10-second chunks, any final chunk of speech that is shorter than 10 seconds is discarded to preserve accuracy.

For instance, if there is a 35-second recording with 23 seconds of speech, the system will process two 10-second chunks of speech and discard the final chunk containing 3 seconds of speech.
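The chunking rule above can be illustrated with a short sketch. It is only arithmetic on the amount of speech left after VAD; the function name `split_speech` is hypothetical and is not part of the product's API.

```python
CHUNK_SECONDS = 10  # chunk length stated in the text


def split_speech(speech_seconds, chunk_seconds=CHUNK_SECONDS):
    """Return (number of full chunks, seconds of speech discarded).

    `speech_seconds` is the amount of speech remaining after VAD,
    not the total duration of the recording.
    """
    if speech_seconds < 0:
        raise ValueError("speech_seconds must be non-negative")
    full_chunks = int(speech_seconds // chunk_seconds)
    discarded = speech_seconds - full_chunks * chunk_seconds
    return full_chunks, discarded


# The example from the text: 23 seconds of speech in a 35-second recording.
chunks, discarded = split_speech(23)
print(f"{chunks} chunks processed, {discarded} s of speech discarded")
```

For the 23-second example this yields two full chunks and 3 seconds of discarded speech, matching the description above.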

Accuracy evaluation

The accuracy metric used in our evaluations is straightforward: the ratio of correctly classified recordings to the total number of recordings in the dataset.

Even though the Language Identification technology provides probabilities for all languages, this metric only considers the recording to be correctly classified if the ground truth language class was assigned the highest probability.
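As a minimal sketch of this metric, the snippet below counts a recording as correct only when the ground-truth language has the highest probability. The data layout (one dictionary of probabilities per recording) and the language labels are assumptions made for illustration, not the format returned by the technology.

```python
def top1_accuracy(predictions, ground_truth):
    """Fraction of recordings whose top-scoring language is the true one.

    predictions:  list of {language: probability} dicts, one per recording
    ground_truth: list of true language labels, in the same order
    """
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth differ in length")
    if not predictions:
        raise ValueError("at least one recording is required")
    correct = sum(
        1
        for probs, truth in zip(predictions, ground_truth)
        if max(probs, key=probs.get) == truth
    )
    return correct / len(ground_truth)


# Hypothetical results for three recordings.
predictions = [
    {"cs": 0.7, "sk": 0.2, "pl": 0.1},  # top language: cs
    {"cs": 0.4, "sk": 0.5, "pl": 0.1},  # top language: sk
    {"cs": 0.3, "sk": 0.3, "pl": 0.4},  # top language: pl
]
ground_truth = ["cs", "cs", "pl"]

accuracy = top1_accuracy(predictions, ground_truth)
print(f"accuracy: {accuracy:.1%}")
```

In this made-up example the second recording is misclassified even though the true language received a probability of 0.4, so two of three recordings count as correct.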

Specifying languages and groups

The Language Identification technology uses prior weights for each available language, which represent the initial belief for each language being correct. These weights range from 0 to 1 for each language and are subsequently normalized by the system to sum up to 1. By default, the prior weights are set to 1 for all languages, meaning that all languages have the same prior probability of being detected. The user can choose to detect only a subset of the available languages by setting the prior weights of the remaining languages to 0, thereby preventing these languages from appearing in the results. Excluding a language works at the level of score estimation, not at the level of languageprint extraction. This means that the language is still recognized at the lower level, but its probability is then redistributed to the other languages.
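The effect of prior weights can be sketched as follows. The sketch assumes that posteriors are obtained with Bayes' rule from per-language likelihoods and the normalized priors; the text does not state the exact formula, so treat this as an illustration only. The function name `language_posteriors`, the language labels, and the likelihood values are hypothetical.

```python
def language_posteriors(likelihoods, prior_weights=None):
    """Combine per-language likelihoods with prior weights (assumed Bayes' rule).

    likelihoods:   {language: likelihood}
    prior_weights: {language: weight in [0, 1]}; missing languages default to 1
    """
    prior_weights = prior_weights or {}
    weights = {lang: prior_weights.get(lang, 1.0) for lang in likelihoods}
    if any(w < 0 or w > 1 for w in weights.values()):
        raise ValueError("prior weights must lie between 0 and 1")

    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("at least one language needs a non-zero weight")
    # Normalize the weights so that the priors sum to 1.
    priors = {lang: w / total_weight for lang, w in weights.items()}

    # Languages with a zero prior are dropped from the results.
    joint = {
        lang: likelihoods[lang] * priors[lang]
        for lang in likelihoods
        if priors[lang] > 0
    }
    evidence = sum(joint.values())
    if evidence == 0:
        raise ValueError("all remaining languages have zero likelihood")
    return {lang: value / evidence for lang, value in joint.items()}


likelihoods = {"cs": 0.6, "sk": 0.3, "pl": 0.1}  # hypothetical values

print(language_posteriors(likelihoods))               # all languages, equal priors
print(language_posteriors(likelihoods, {"pl": 0.0}))  # "pl" excluded
```

With `pl` excluded, its share of the probability mass is redistributed to `cs` and `sk`, and the remaining posteriors again sum to 1.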

In addition to limiting the recognized languages, users can group multiple languages into custom groups. When a group is formed from a set of languages, the scores of these languages are combined and reported as a group score. The individual language scores remain in the response, listed under the group they belong to. One possible use case is to group the individual dialects of a language into a single encompassing group. You can find predefined groups here.

See the list of available languages.
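The grouping behavior described above can be sketched as below. Two things are assumptions here: that "combined" means summing the member scores, and the shape of the returned structure. Neither is the actual response schema, and the group and language names are hypothetical.

```python
def apply_groups(scores, groups):
    """Report group scores while keeping member language scores under each group.

    scores: {language: score}
    groups: {group name: list of member languages}
    """
    grouped_languages = set()
    result = []
    for name, members in groups.items():
        present = {lang: scores[lang] for lang in members if lang in scores}
        result.append(
            {
                "group": name,
                "score": sum(present.values()),  # assumed: group score is the sum
                "languages": present,            # member scores stay visible
            }
        )
        grouped_languages.update(present)

    # Languages that belong to no group are reported on their own.
    for lang, score in scores.items():
        if lang not in grouped_languages:
            result.append({"language": lang, "score": score})
    return result


scores = {"en-US": 0.5, "en-GB": 0.3, "de": 0.2}  # hypothetical scores
groups = {"english": ["en-US", "en-GB"]}           # hypothetical dialect group

for entry in apply_groups(scores, groups):
    print(entry)
```

Here the two English varieties are reported under one `english` entry with a combined score, while `de` is reported separately.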

Specifying speech length

Since Language Identification technology identifies languages based on voice rather than audio alone, limiting the amount of audio processed to speed up the process may be counterproductive if insufficient speech remains in the resulting recording. Therefore, it is recommended to control the amount of data processed by setting the speech length parameter. This parameter ensures that the technology uses only the necessary amount of data to meet the specified value.

The amount of speech is closely related to the technology's accuracy, but the relationship is not linear. See the table in the Example measurements section for a practical example of this relationship on an example dataset.

The relationship between the amount of speech and Language Identification accuracy is generally data-dependent, so the values in that table may not carry over to your data specifically.

The optimal balance between accuracy and performance varies for each user. Begin with the reference table in the Example measurements section and adjust this parameter to achieve your desired balance. Note that the Language Identification technology excludes the last chunk of speech if it is shorter than 10 seconds, as stated in the How does it work? section.

Example measurements

Below is a sample measurement performed on an example dataset consisting of 2,306 recordings in 13 languages, with recording lengths ranging from several seconds to several minutes. The accuracy evaluation is done using the metric described in the Accuracy evaluation section, where higher accuracy indicates better performance.

The measurement was performed with one instance of the Language Identification microservice running on an NVIDIA RTX 4000 SFF Ada Generation GPU with 15GB of RAM.

| Amount of speech | Accuracy [%] | FTRT | Speed up |
|---|---|---|---|
| unlimited | 74.3 | 322.25 | 1.0 |
| 20s | 73.6 | 766.77 | 2.38 |
| 10s | 71.9 | 1079.0 | 3.45 |
| 5s | 67.0 | 1709.3 | 5.46 |

The peak memory consumption for the unlimited amount of speech was 3.17GB of RAM, and 2.82GB of GPU memory.

Processing the audio on a GPU substantially improves the performance of the system. The performance is significantly lower when processing the same dataset on a CPU. For example, running 8 instances of the microservice on 8 cores of an AMD Ryzen 9 7950X3D gives approximately 42.4 FTRT for the unlimited amount of speech, with a peak RAM consumption of 14.28GB, which is around 5.3 FTRT and 1.8GB per core, respectively. Processing with one instance on a CPU is therefore around 60 times slower than on a GPU.

The FTRT (Faster Than Real Time) column indicates the speed-up factor of the processing relative to the audio duration. An FTRT of 10 means that 10 seconds of input audio is processed in 1 second of CPU time.
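The arithmetic behind these figures can be reproduced with a few lines. The helper names `ftrt` and `processing_seconds` are hypothetical; the numeric inputs are the values quoted in this section.

```python
def ftrt(audio_seconds, elapsed_seconds):
    """Faster-than-real-time factor: seconds of audio processed per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return audio_seconds / elapsed_seconds


def processing_seconds(audio_seconds, ftrt_value):
    """Time needed to process the given amount of audio at a given FTRT."""
    if ftrt_value <= 0:
        raise ValueError("ftrt_value must be positive")
    return audio_seconds / ftrt_value


# Definition from the text: 10 seconds of audio processed in 1 second.
print(ftrt(10, 1))

# One hour of audio at the measured GPU figure for unlimited speech.
gpu_ftrt = 322.25
print(f"{processing_seconds(3600, gpu_ftrt):.1f} s for one hour of audio")

# CPU figure: 8 instances reach about 42.4 FTRT in total.
cpu_ftrt_per_instance = 42.4 / 8
gpu_vs_cpu = gpu_ftrt / cpu_ftrt_per_instance
print(f"per-instance CPU FTRT: {cpu_ftrt_per_instance:.1f}")
print(f"GPU vs. single CPU instance: about {gpu_vs_cpu:.0f}x")
```

The last two lines show where the per-core figure of about 5.3 FTRT and the roughly 60-fold GPU advantage come from.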

Although the dataset includes only 13 languages, scoring was conducted across all 140 languages with equal prior weights. Accuracy could be enhanced by limiting recognition to these 13 languages.

These measurements serve as examples only. Actual performance may vary based on the hardware and dataset employed.

FAQ

How does the system handle non-native speakers (e.g., a Czech speaking Spanish)?

Non-native speech presents a great challenge to the system. We expect that the accuracy would drop for such data. However, the system's performance may be influenced by many factors, such as speech length, audio quality, and the speaker's pronunciation. Thus, it is difficult to predict the behavior of the system in every particular use case. Limiting the set of scored languages may help in this use case.

What if there are multiple languages spoken in one recording?

The system was trained to classify recordings containing only a single language. In cases where multiple languages are present in a single recording, various scenarios can occur depending on many factors, such as the length and quality of each segment. One possible result is that one of the languages may receive a high probability, while the others may receive lower probabilities. This behavior can vary significantly depending on the data, so it is strongly recommended to use audio in a single language.

What is the typical processing speed of the system?

The processing speed is highly dependent on the hardware it runs on. You can find example measurements in the Example measurements section.

What is the typical accuracy of the system?

The accuracy of the system is highly dependent on the dataset used. Typically, the accuracy ranges from 70% to 80%. You can find example measurements in the Example measurements section.

What if the accuracy is not high enough?

If the system's accuracy does not meet your requirements, consider limiting the list of recognized languages to a more specific subset expected to be present in the audio.

For example, for the dataset described in the Example measurements section, restricting the list to only the 13 languages present in the dataset increases accuracy to approximately 80%.